Deploy your Scrapy spiders from GitHub

Up until now, your deployment process using Scrapy Cloud has probably been something like this: code and test your spiders locally, commit and push your changes to a GitHub repository, and finally deploy them to Scrapy Cloud using shub deploy. However, keeping development and deployment as separate steps can cause problems, such as unversioned or outdated code running in production.

The good news is that, from now on, you can have your code automatically deployed to Scrapy Cloud whenever you push changes to a GitHub repository. All you have to do is connect your Scrapy Cloud project with a repository branch and voilà!

Scrapy Cloud’s new GitHub integration will help you ensure that your code repository and your deployment environments are always in sync, getting rid of the error-prone manual deployment process and also speeding up the development cycle.

Check out how to set up automatic deploys in your projects:

If you are not that into videos, have a look at this guide.

Improving your workflow with the GitHub integration

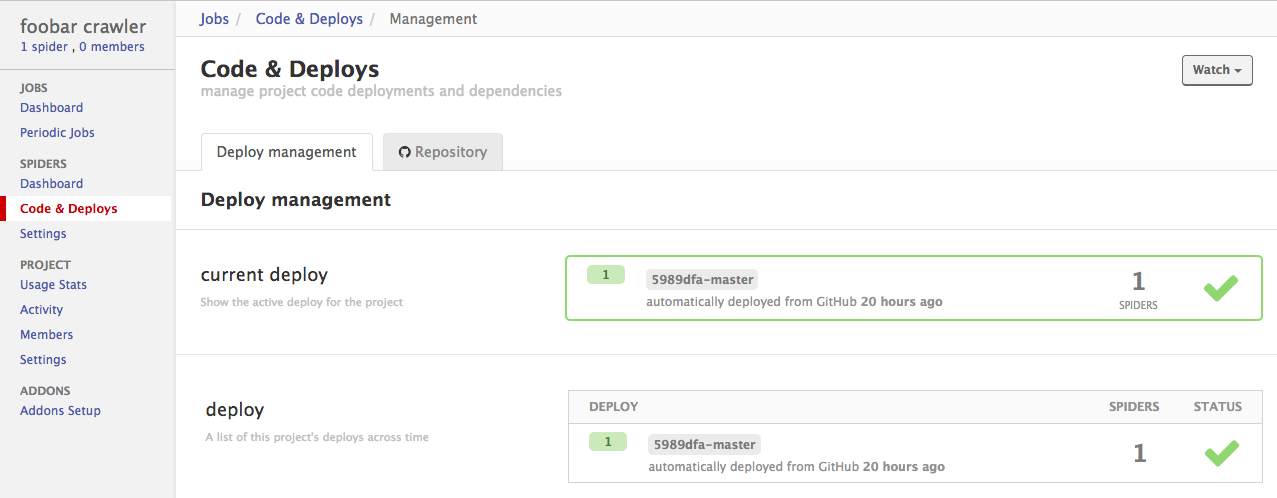

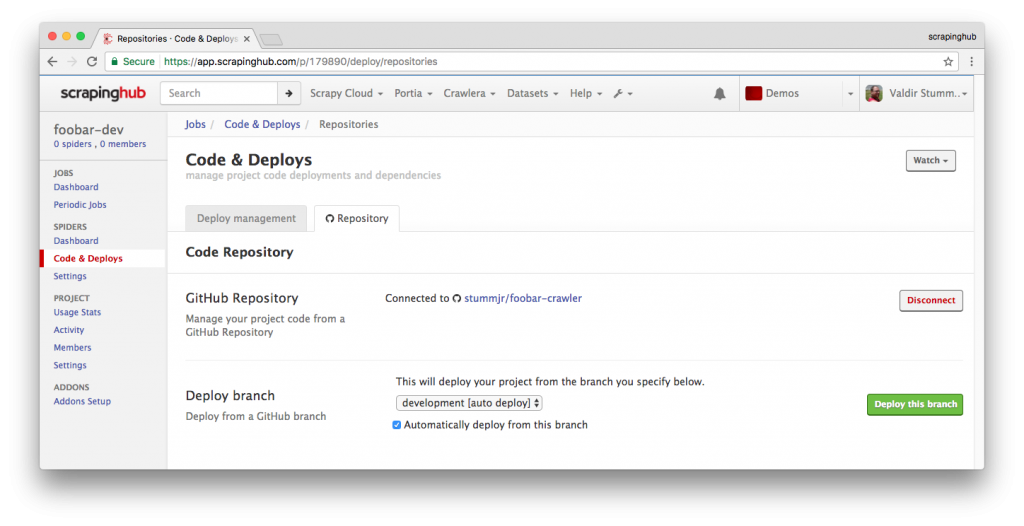

You could use this feature to set up a multi-stage deploy workflow integrated with your repository. Let's say you have a repo called foobar-crawler with three main branches (development, staging and master), and you need one deployment environment for each of them.

You create one Scrapy Cloud project for each branch:

- foobar-dev
- foobar-staging
- foobar

And connect each of these projects with a specific branch from your foobar-crawler repository, as shown below for the development one:

Then, every time you push changes to one of these branches, the code is automatically deployed to the proper environment.
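To make the branch-to-project pairing concrete, here is a minimal Python sketch of the mapping described above. It is only an illustration of the idea, not part of Scrapy Cloud or shub: the dictionary and the `project_for_branch` helper are hypothetical names, and the pairing (development to foobar-dev, staging to foobar-staging, master to foobar) is assumed from the order of the example.

```python
# Hypothetical illustration of the multi-stage setup from the example.
# The pairing of branches to projects is an assumption based on their names.
BRANCH_TO_PROJECT = {
    "development": "foobar-dev",
    "staging": "foobar-staging",
    "master": "foobar",
}


def project_for_branch(branch):
    """Return the project a push to `branch` would deploy to.

    Returns None for branches that are not connected to any project,
    such as short-lived feature branches.
    """
    return BRANCH_TO_PROJECT.get(branch)


if __name__ == "__main__":
    for branch in ("development", "staging", "master", "feature/new-spider"):
        print(branch, "->", project_for_branch(branch))
```

In this picture, pushes to any branch outside the three connected ones trigger no deploy, so work-in-progress branches never reach a deployment environment.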

Wrapping up

If you have any feedback regarding this feature or the whole platform, leave us a comment.

Start deploying your Scrapy spiders from GitHub now.