We need data. And we need it faster than ever

The clock is always ticking in data collection. Web scraping developers know this better than anyone—complex and changing site structures, the moving target of anti-bot technology, and shifting client demands.

Many experienced web scraping teams still cling to a fragmented toolkit of single-purpose scraping tools, hoping it will carry them to the next deadline. Data teams know this is inefficient, but when you’re in the trenches, tooling efficiency is hard to prioritize over the delivery in front of you.

You’ve probably dabbled with Large Language Models (LLMs) to inject a bit of AI magic into the process, only to find that they’re a blunt instrument—expensive, unpredictable, and ill-suited for the nuanced demands of extracting vast amounts of data accurately. It’s like using a sledgehammer to crack an egg. The reality is, despite all the hype, LLMs weren’t built for this level of complexity in the web scraping world.

But what if there was a way to blend the flexibility of LLMs with the precision and efficiency of a tool specifically trained for the chaos of modern web data extraction? What if you could have the power of AI, tailored to handle the unique challenges of web scraping, without bleeding your budget dry?

Zyte has faced these pain points head-on for over a decade, building solutions that don’t just react to change but anticipate it. In this post, we'll unveil how our AI-powered approach redefines what’s possible in web scraping, making it faster, more reliable, and—crucially—cost-effective at scale.

Let’s explore why traditional methods fall short, why LLMs aren’t the silver bullet you might think, and how Zyte's AI-driven solution can finally give you the edge you’ve been searching for.

Will adding LLMs to the traditional tech stack work?

Traditional web scraping setups rely on multiple tools: web scraping frameworks, fleets of headless browsers, proxy services, and rotation tools. While this system can work, it’s often expensive and frustrating for data teams that need fresh data quickly and at scale.

Even with an excellent framework, this approach has two common problems for each website you add to your pipeline:

It costs days or weeks of developer time, so it’s hard to add new sites quickly.

It contributes a significant and never-ending amount of maintenance work that builds up over time and cripples team productivity.

Every time a website changes its layout or anti-bot measures, your spiders break and you must fix them—until they break again. This endless cycle won’t be solved by new “unblockers” or proxy APIs either; these only handle ban management (and often not particularly cost-efficiently), and you still need to integrate other tools.

Given their success in web development tasks, some suggest large language models (LLMs) as an alternative. While LLMs offer flexibility and reduced maintenance compared to hand-coded scripts, they are prohibitively expensive at scale. They can also hallucinate, producing incorrect data that needs manual verification, and their parsing may still fail when website layouts change.

So, what’s the solution?

If they are too expensive to convert HTML to data at scale, what about using LLMs to generate scraping code?

This seems like a great compromise on paper: you build a system to run crawlers and have an LLM generate the required parsing code. The major issue is that you still end up with hard-coded parsing rules that make your spiders brittle. They can (and will) break mid-crawl without warning, and the code must be regenerated before you can resume scraping.
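To make the brittleness concrete, here is a minimal sketch of a hard-coded parsing rule meeting a layout change. Both page snippets, the `PRICE_XPATH` rule, and the `extract_price` helper are hypothetical, and the markup is kept well-formed so that Python's standard-library parser can read it; real pages would normally need a proper HTML parser.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Two hypothetical versions of the same product page.
PAGE_V1 = """
<html><body>
  <div class="product">
    <span class="price">19.99</span>
  </div>
</body></html>
"""

# After a redesign: same data, different tags and class names.
PAGE_V2 = """
<html><body>
  <section class="item">
    <p class="amount">19.99</p>
  </section>
</body></html>
"""

# A hard-coded rule of the kind a developer (or an LLM) might write for PAGE_V1.
PRICE_XPATH = ".//div[@class='product']/span[@class='price']"


def extract_price(html: str) -> Optional[str]:
    """Return the price text, or None when the selector no longer matches."""
    root = ET.fromstring(html.strip())
    node = root.find(PRICE_XPATH)
    return node.text if node is not None else None


print(extract_price(PAGE_V1))  # the rule matches the original layout
print(extract_price(PAGE_V2))  # the rule silently finds nothing after the redesign
```

The price is still on the second page, but the rule no longer finds it. Whether the rule was written by hand or generated by an LLM makes no difference: it has to be rewritten before the crawl can continue.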

By 2022, Zyte had been facing these challenges firsthand for years. With demand increasing and teams needing to create tens of thousands of spiders for clients, maintaining complex projects for global companies was a massive challenge, one best solved by smarter automation and a Composite AI approach.

First, we launched machine-learning-powered automatic parsing tools that identify data on any page and map it to a complex schema quickly and accurately. Because they don’t use hard-coded selectors like those in a typical Scrapy spider or BS4 project, they don’t break when the site changes its code.

Secondly, we launched Zyte API, which lets our clients automate request handling to avoid bans and get the HTML the ML models need to run. Because it automatically adapts to changing bans and uses the leanest set of proxies and browsers, it never gets blocked. You can use it on any site, confident that you can skip the trial and error of building a custom strategy and infrastructure for each website.
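As a rough sketch of what this looks like from the client side, the snippet below builds a single POST request asking for browser-rendered HTML. The endpoint URL, the `browserHtml` field, and Basic auth with the API key as the username are assumptions to verify against the current Zyte API reference; `build_request` and `fetch_browser_html` are hypothetical helper names.

```python
import base64
import json
import urllib.request

# Assumed endpoint; confirm against the current Zyte API reference.
ZYTE_API_URL = "https://api.zyte.com/v1/extract"


def build_request(api_key: str, url: str) -> urllib.request.Request:
    """Build (but do not send) a request for the browser-rendered HTML of `url`."""
    payload = {"url": url, "browserHtml": True}  # assumed field names
    # Basic auth with the API key as username and an empty password (assumption).
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(
        ZYTE_API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch_browser_html(api_key: str, url: str) -> str:
    """Send the request and return the rendered HTML (performs a network call)."""
    with urllib.request.urlopen(build_request(api_key, url)) as response:
        return json.load(response)["browserHtml"]
```

The client code contains no proxy lists, retry logic, or browser management. In this model those concerns live behind the API.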

Lastly, we combined these tools using configurable and open-source smart spider templates written in Scrapy, which can be forked and customized as needed. This creates an end-to-end method to automate access, crawling, and parsing of data on websites.

This approach eliminated the majority of the work creating and maintaining spiders for common data types, such as products, articles, SERPs, and job listings.

After solving these problems for ourselves, we made the resulting tools available to the rest of the world as a product called AI Scraping.

Automated parsing and LLMs

When using Zyte’s spider templates, the parsing part of web data extraction is completely automated by ML. You still get your data no matter what changes on the target website, its navigation, or its anti-bot system, without writing any XPaths or selectors.

Due to the adaptive nature of the spider templates, even if the website changes its layout in the middle of your crawl, it will still work. The system can identify any changes in the HTML structure and still find the data specified in the schema.

If you want data that isn’t included by default in the schema, you’re in complete control and can add custom data points to your spider in one of two ways:

Good ol’ XPaths and selectors.

Custom attributes with LLM prompts via Zyte API.
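The second option can be sketched as a request body that asks for the standard product schema plus an extra field described in plain language. The `product` and `customAttributes` field names and the `type`/`description` shape are assumptions to check against the current Zyte API reference. `product_request`, the example URL, and the `material` attribute are hypothetical.

```python
import json
from typing import Any, Dict


def product_request(url: str, custom: Dict[str, str]) -> Dict[str, Any]:
    """Build a request body for product data plus extra, LLM-described fields.

    `custom` maps an attribute name to a plain-language description of what
    to extract; each entry becomes a string-typed custom attribute.
    """
    body: Dict[str, Any] = {"url": url, "product": True}
    if custom:
        body["customAttributes"] = {
            name: {"type": "string", "description": description}
            for name, description in custom.items()
        }
    return body


body = product_request(
    "https://example.com/product/123",  # placeholder URL
    {"material": "The main material the product is made of"},
)
print(json.dumps(body, indent=2))
```

The description plays the role of the prompt. The default schema fields still come from the ML extraction, and only the extra attribute relies on the LLM.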

Legal compliance from the start

A compliance strategy is a must in web scraping, especially at scale. The more data you collect, the greater the risk of legal problems if applicable laws or website terms are neglected.

Zyte API uses compliance-ready schemas from the start, meaning that most web data extraction projects initiated with this solution come with built-in compliance and peace of mind.

Enterprise customers receive a free compliance evaluation and can access web scraping advice anytime.

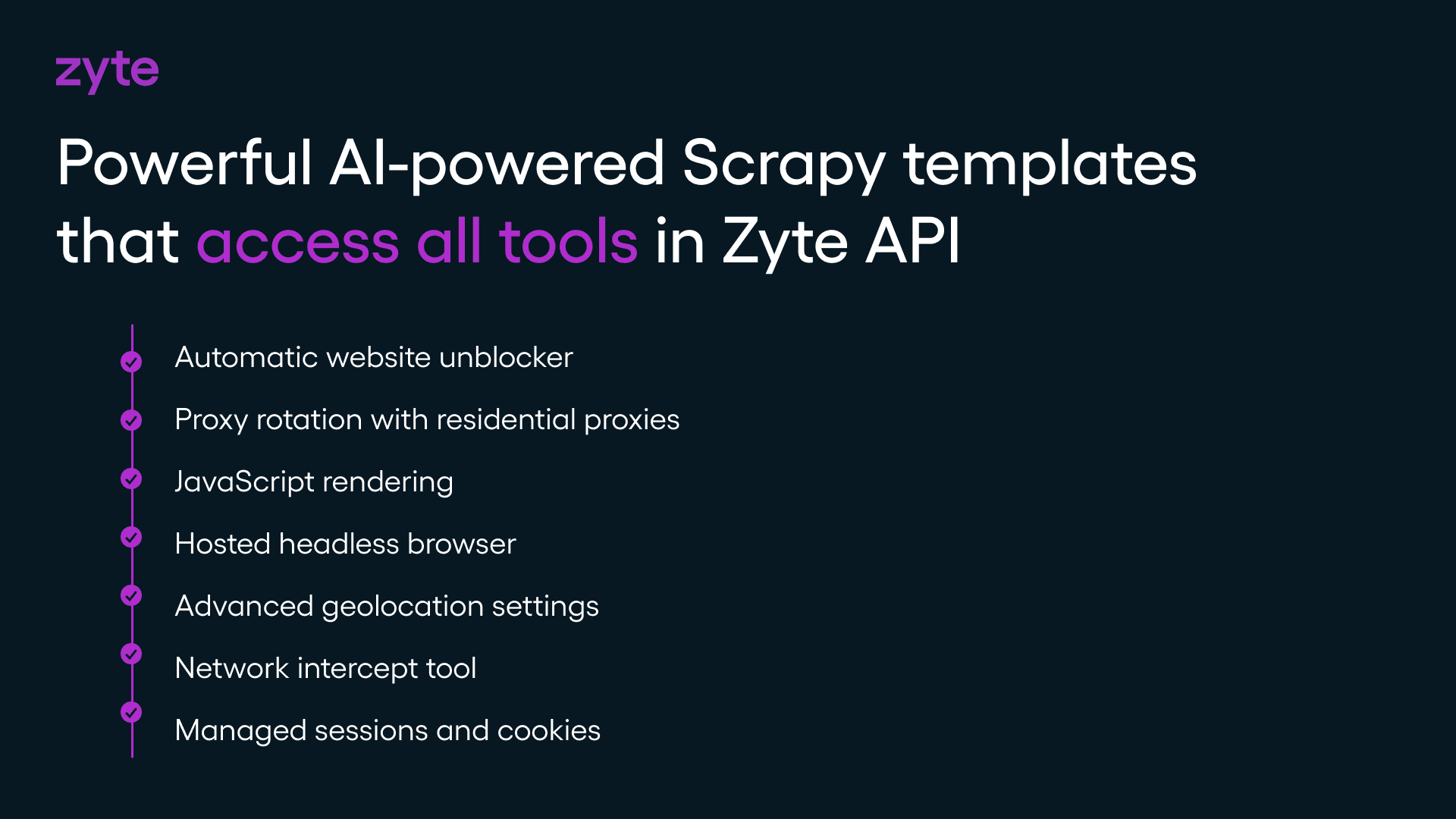

Access the Swiss-army knife of web scraping tools

Using Zyte’s AI-powered spider templates—AI Scraping—means that you can also easily access every tool within Zyte API:

A powerful ban management tool that unblocks any website.

Proxy rotation with residential proxies when needed.

JavaScript rendering for screenshot-taking.

A fully hosted headless browser with programmable actions.

Advanced geolocation settings serving IPs from more than 200 countries and regions.

A network intercept tool.

Managed sessions and cookies.

Scrapy Cloud for hosting and backing up your spiders.

Enterprise packages with priority support and volume discounts.

Fully hosted API

Scaling is easy if you have millions to spend on automation and AI, but it only makes sense if it’s cost-effective.

Zyte API was built with economies of scale in mind, so developers can rely on our hosting and maintenance infrastructure and focus solely on data extraction.

Our solution handles large-scale web scraping with the same cost efficiency as traditional methods. Any extra cost from using our AI is quickly balanced by saving time, reducing maintenance, and getting data faster.

We offer a fully hosted and managed system that scales automatically. There’s no need for a dedicated team to manage hosting or infrastructure. Our solution grows with your needs, from small projects to enterprise-level demands, without any limits.

Powering massive data extraction projects across the globe

AI-powered spider templates were recently launched to the public, but Zyte developers have been using them to power our data extraction business for a few years.

In a recent data extraction project for a large global retailer, our AI-powered automatic product data extraction technology extracted a massive amount of data from the web three times faster than traditional methods, leaving only a handful of cases for our delivery team to create manually.

In this project, the client needed to extract unstructured data from text and descriptions, which was solved using an LLM-based solution. The unstructured description text was first extracted using our AI Scraping and then processed by an LLM using custom prompts created by the team.

What developers are saying about it

Intelligo, a background intelligence solution that relies on 1 million scraped articles per month, uses Zyte API to extract news and article data from websites. This feeds their AI-powered platform with the most accurate data available, allowing it to provide comprehensive background checks for companies and individuals.

One of their biggest challenges is getting structured data from unstructured HTML on many websites, which is solved by combining Zyte's trained AI with LLMs. Nadav Shkoori, Backend Developer at Intelligo, describes the resulting solution: "It's easy to use, and the data output is nicely structured, making it easier for us to analyze."

Since the launch of AI-powered scraping, we have been reaching out to developers in the Extract Data Community and important market players, inviting them to test the AI tool to get data fast from e-commerce websites.

Try it now with a coupon code

Every web scraping developer who does not play with AI is missing opportunities to get data faster. Try Zyte AI scraping now with the coupon code AISCRAPING2.

If you try Zyte AI Scraping and aren’t getting data from a website within minutes, please get in touch with our support.

FAQ

Why are traditional web scraping tools no longer enough?

Traditional scraping tools struggle with modern websites that use complex, dynamic content like JavaScript (AJAX), infinite scrolling, and frequently changing layouts. These outdated tools rely on hard-coded rules that break whenever a site’s structure changes, leading to constant maintenance and inefficiency.

Can LLMs replace traditional web scraping methods?

No, LLMs are not an ideal replacement. While they offer flexibility, they’re often too expensive and inaccurate for large-scale web scraping. They can even hallucinate or generate incorrect data that requires manual verification. LLMs weren’t built to handle the nuanced demands of web scraping at scale.

What’s the best way to combine LLMs with web scraping?

The most effective approach is to blend the adaptability of LLMs with AI models designed specifically for web scraping. LLMs can generate parsing code or handle unstructured data, while precision models automate data extraction from structured web content, ensuring accuracy and minimizing the need for constant updates.

How does AI improve the web scraping process?

AI-driven models can automatically adapt to changes in a website’s structure, eliminating the need for hard-coded rules and reducing the maintenance burden. These models are also faster and more cost-effective at handling complex, dynamic content.

What are the main challenges of scraping dynamic content?

Dynamic content powered by JavaScript, such as infinite scrolling, continually updated product catalogs, or search-gated content, requires more sophisticated scraping techniques. These include advanced parsing, handling frequent site updates, and dealing with anti-bot measures while staying legally compliant.

What makes Zyte’s approach to web scraping different?

Zyte combines machine learning-powered parsing tools with a robust API that automates request handling, ban management, and spider maintenance. This system adapts to changing website layouts and content, drastically reducing manual intervention while ensuring accurate, scalable data extraction.

Is AI scraping cost-effective at scale?

Yes, AI scraping can be cost-effective at scale because it reduces the time and resources spent on maintenance, adapts automatically to website changes, and speeds up data extraction. This makes it more efficient than traditional scraping methods that require constant tweaking and troubleshooting.