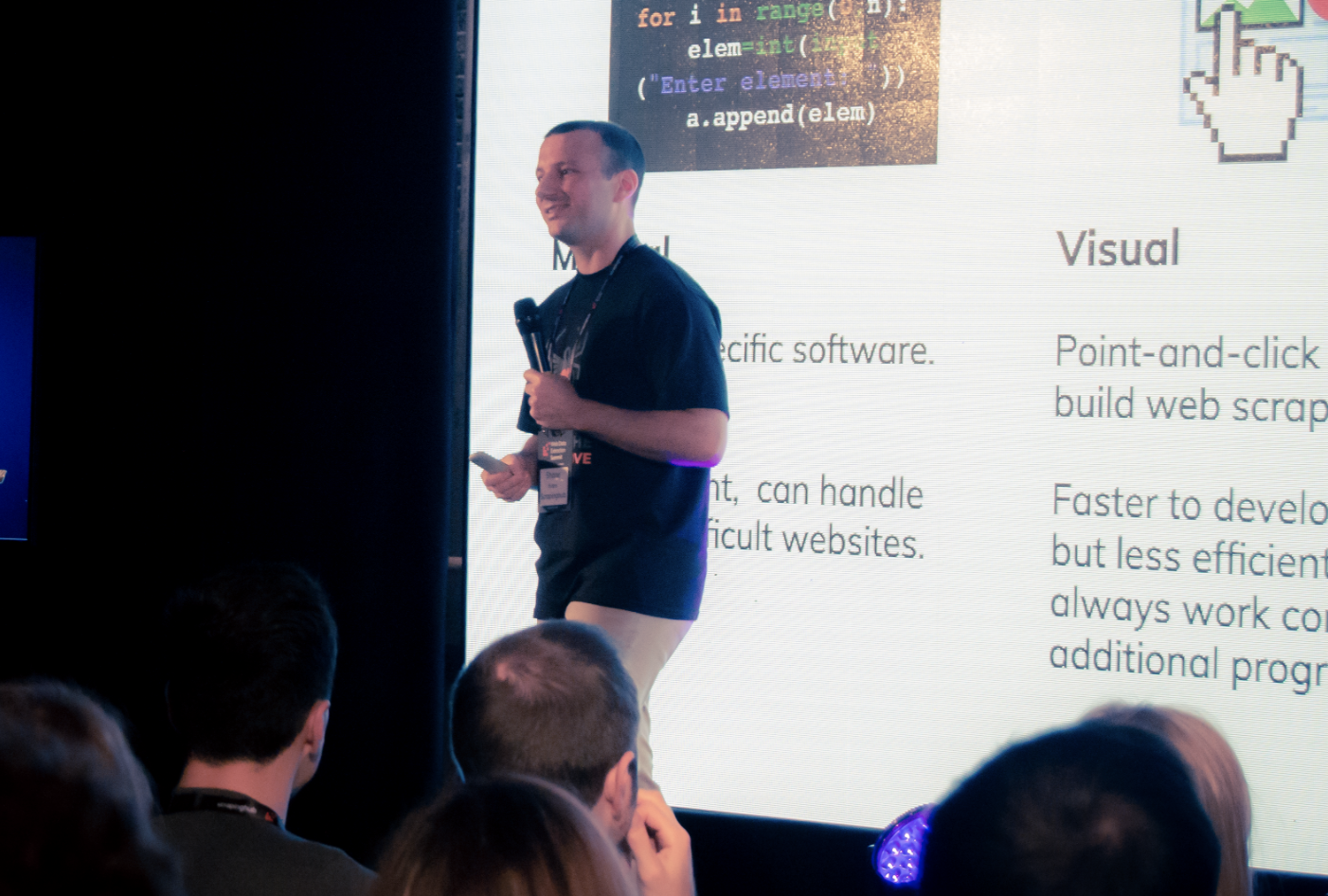

The Web Data Extraction Summit 2019

The Web Data Extraction Summit was held last week, on 17th September, in Dublin, Ireland. This was the first-ever event dedicated to web scraping and data extraction. We had over 140 curious attendees, 16 great speakers from technical deep dives to business use cases, 12 amazing presentations, a customer panel discussion, and unlimited Guinness.

Our goal with this event was to share not just our own expertise with the audience but also provide a platform for external industry experts and actual web data users to share their knowledge and experience with web data extraction. Based on the feedback we got during and after the event, it was an absolute success! The people who came learned a lot and were able to connect with others in the industry.

“The content covered pretty much all aspects of web extraction, and the talks were a good length. Not a marketing pitch in sight.”

Here is a quick overview of the day and some highlights from the talks:

To kick-off, the day, Shane Evans, CEO at Zyte talked about how web data extraction is impacting businesses and gave us his insights on how the industry will evolve.

Right after, Cathal Garvey, Data Scientist, took the stage to provide some insights into what data types, use cases, applications, and emerging trends. All these based on scraping 9 billion pages per month for thousands of Zyte customers.

The third talk was about legal compliance and GDPR in web scraping. Kate O’Brien, Legal Counsel at Zyte, talked about best practices guidelines to make sure you and your web scraper are compliant. The audience had quite a few questions about the legal implications of scraping personal data and others. It’s obviously a hot topic nowadays.

Attila Toth, Technology Evangelist, and Pawel Miech, Technical Team Lead, talked about web scraping infrastructures and problems you need to solve if you extract data from the web at scale. Also providing actual tips for data extraction developers, like how to find data fields most effectively in an HTML or how to get through captchas. A takeaway from the presentation is that the web is like a jungle and you should not expect that each website will follow the standards.

Then, Bryan O’Brien, Product Manager, showed us how the new product from Zyte Automatic Extraction (formerly AutoExtract), an AI-enabled automatic data extraction API for article and e-commerce data extraction is changing the way we extract data from the web today. Zyte Automatic Extraction uses artificial intelligence and machine learning to make news data extraction and getting product information from pages a breeze. No more need for data field selectors or XPath.

In the next talk, the two biggest topics for any successful web scraping projects were discussed - proxies and anti-ban best practices. Akshay Philar, Head of Development, and Tomas Rinke, Systems Engineer, gave us a better understanding of how anti-ban systems work and proxy management. They also exposed their best actionable tips on how to scale web scraping projects with proxies.

Going further than the usual use cases that are associated with web scraping we were able to show something new. Something that is not only interesting but actually making the world a better place. Amanda Towler (Hyperion Gray) & David Schroh (Uncharted Software) showed how web data extraction is used to fight human trafficking. They talked about how they are using web data extraction to locate potential victims of human trafficking and identify those who exploit them. In the process, they open-sourced many web scraping and data processing tools.

Mikhail Korobov, Head of Data Science, gave an entertaining and super educational talk about how machine learning can be used in web scraping. It is also how our AI web scraping tool, Zyte Automatic Extraction (formerly AutoExtract), works under the hood. Reassuring, that it works on any website and the algorithm doesn’t need a “sample” of the website structure before data extraction.

Andrew Fogg, Founder of import.io, talked about how web data is used to identify trends and inform business decisions. And took us through some unusual business questions that can be answered with web data.

Then, we had some of our customers - Just-Eat, OLX, Revuze, and Eagle Alpha - in a panel discussion on how they are leveraging web data and what are some of their challenges when it comes to web data extraction. One takeaway from this panel was that getting the data is crucial but it’s also important to be able to get actionable insights from the data.

Or Lenchner, CEO at Luminati, talked about the importance of data quality. He showed us how to tweak your web scraper to avoid common traps websites give you and what to look for on a web page to recognize bad quality data.

In the last talk, we learned about data democratization from Juan Riaza, a Software Developer at Idealista. He gave us technical insights into the usage of Apache Airflow, Scrapy Cloud, and Apache Spark together to build out a company-wide data infrastructure.

It was a busy one-day event. Packed with interesting insights from industry and technology experts, customers, and web data users. Our speakers gave their expertise and suggestions on all of the different web data extraction challenges. Proxies, anti-ban, legal, scalability, machine learning. On a higher level, we also had several talks about use cases.

The people who came to this event had the opportunity to learn from several web data extraction experts, hear amazing stories about what web data can do, connect with like-minded people, and had a great time in the Guinness Storehouse. We expect to organize the event next year, register your interest here if you want to be kept informed or apply to speak at the event next year.