The 2025 Web Scraping Industry Report - For Industry Players

For the Industry Players: Aptitude and Attitude

The stakes are high, and so is the opportunity.

The market is growing, the competition is increasing, and the resistance is intensifying.

Every day, you’re asking yourself, What are we missing that our competitors are quietly mastering? What’s our competitive edge? How do we integrate AI without overhauling everything?

What Has Shifted?

First, let’s start with the exciting trend: AI.

Aptitude: Artificial Intelligence

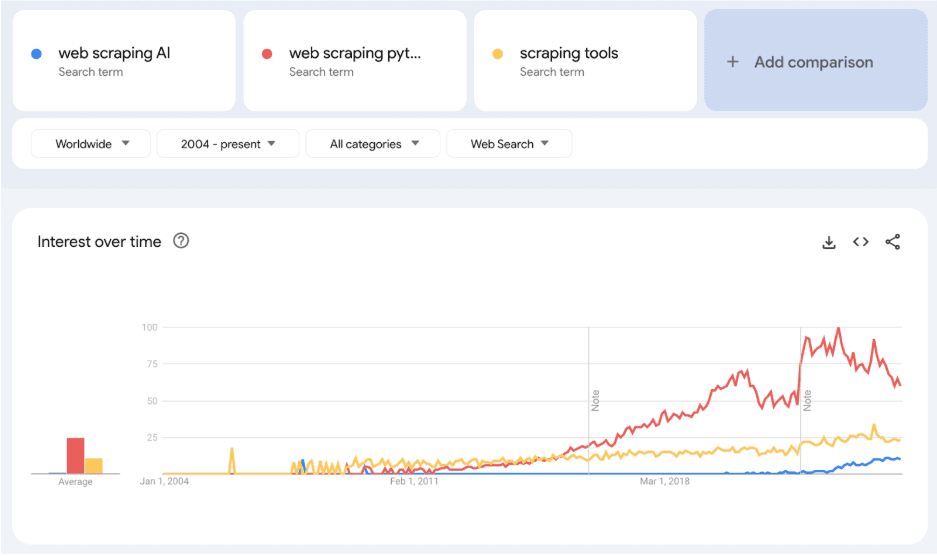

ChatGPT’s release at the end of 2022 paved the way for a mainstream audience to start climbing the AI ivory tower. There was an immediate uptick in people Googling “web scraping AI” in December 2022.

(Source: Google Trends)

Machine learning has long been part of the web scraping industry, but the generative AI breakthroughs over the past two years have further cemented intelligence as table stakes in data extraction products. As they say, AI is whatever hasn't been done yet. Once it's done, it becomes just another piece of technology.

Smarter systems that adapt to dynamic websites, automate complex tasks, and extract structured data with minimal human input are now expected. More and more teams are finding ways to embed "intelligence" into every step of the process, from crawling, rendering, interaction, and extraction to post-processing, monitoring, and optimization.

This shift touches not only the inherent dynamics of the web scraping industry between anti-bot and anti-ban solutions, but also the competition among players in both verticals, who offer different tools across the life cycle.

It is no longer about whether systems are getting smarter; they are. The real question is: whose cats are more intelligent than whose mice, and in what ways?

We are all in an arms race to integrate AI strategically into the right components of our products. And the party is getting more crowded with LLM-jolted newcomers filling in different pieces of the puzzle.

Attitude: Ethical and Compliant Web Data Extraction

Now here comes the less exciting part: compliance.

The competitive edge is shifting away from just technical sophistication. It’s increasingly about how well companies can navigate the legal landscape, uphold data ethics, and ensure long-term reliability.

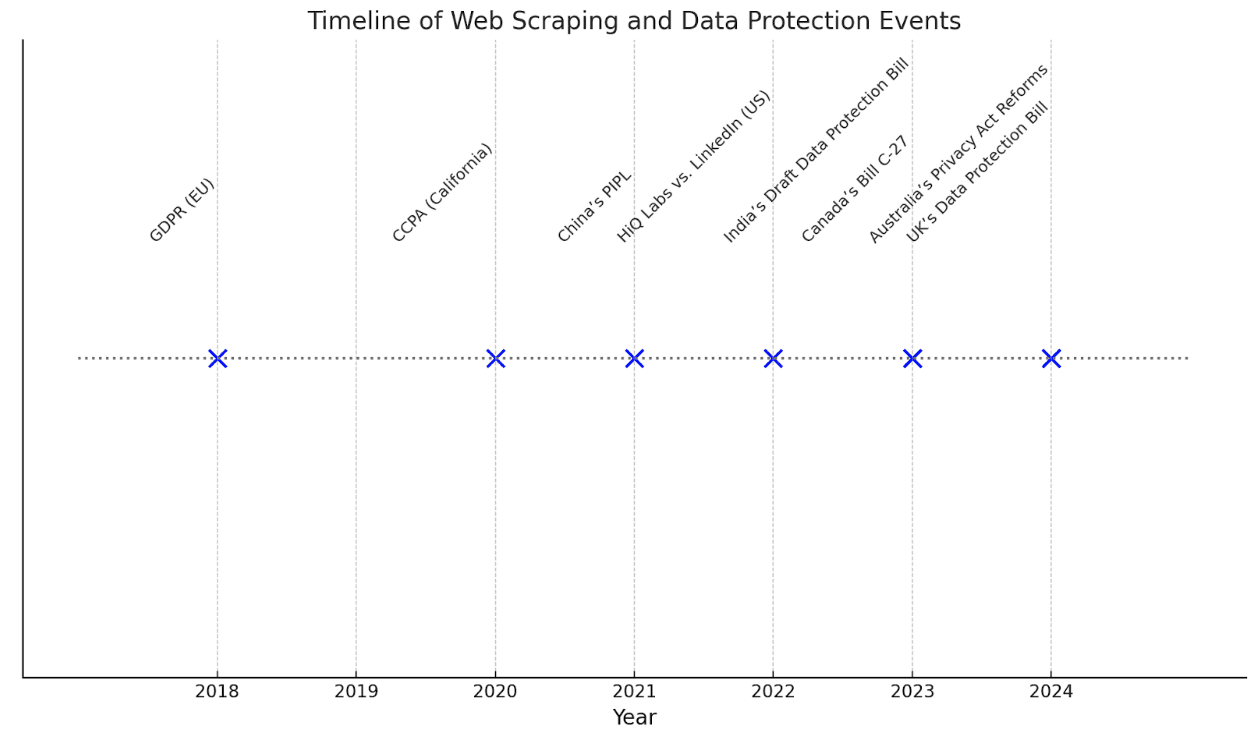

Different legal battles over the past couple of years have forced a rethink.

Different regions are introducing laws and regulations around data privacy. Navigating this maze of fragmented legal terrain requires agility and foresight to avoid fines, bans, or reputational damage.

More IP providers are now implementing stricter KYC processes, and we see an increase over the years in customers wanting to talk about and understand compliance up front.

They are no longer just asking, “Can you get the data?” but also, “How did you get it? Is it ok to scrape any and all public data? Is it ok to scrape public personal data? Can I use copyrighted data for my use case?”

This shift reflects a growing demand for transparency and ethical practices. Compliance used to be a checkbox, but now it's becoming a central pillar of competitive strategy.

First Step Toward Compliance

The simplest way to take a stand for compliance in web data extraction is to implement a clear, transparent policy aligned with established ethical guidelines, such as those from the Ethical Web Data Collection Initiative (EWDCI).

EWDCI is an industry-led and member-driven consortium of web data collection business leaders focused on strengthening public trust, promoting ethical guidelines, and helping businesses make informed data collection choices.

As Sanaea Daruwalla, Zyte’s Chief Legal Officer, explains, “The reputation of web scraping hasn’t always been the best,” with many people believing it to be illegal despite its widespread legitimate uses.

There is no single overarching web scraping law. Tangible guidelines and best practices don't exist in an official capacity. Sanaea points out that the industry is fraught with opaque laws coupled with strong data protection from governments, leaving businesses without clear guidelines for ethical practices. This lack of clarity not only fosters mistrust but also creates real-world business disadvantages. Companies seeking to use public web data often hesitate due to legal and reputational concerns, even though such data is pivotal for critical business decisions.

Zyte recognises that without clear ethical guidelines and transparency, the industry cannot achieve its full potential, which is why it has prioritised setting high standards and advocating for best practices through initiatives like the EWDCI.

Zyte has a dedicated legal team focused on compliance and is ISO 27001 certified. For many of the customers we talk to, these attributes are no longer a “nice to have” but a strict requirement.

Zyte takes compliance and ethical web data collection very seriously because it underpins the sustainability of the web scraping industry and ensures long-term value for all stakeholders. A sustainable business must balance profitability and growth with ethical practices and social responsibility, especially in industries like web scraping, which are often misunderstood and scrutinised.

The public deserves digital peace of mind, and “scraping” doesn’t have to be a dirty word when it is done responsibly.

What about Market Trends?

Glad you asked.

There are some changes in what people are scraping and what they’re doing with it, but not in the direction we had predicted.

Data for AI

Earlier this year, we anticipated a surge in inbound data requests for AI-related use cases, building on the noticeable increase in such projects throughout 2023. As 2024 unfolded, the volume of these requests doubled compared to the previous year—but the real story lies in their value.

AI-related data requests now represent 5% of Zyte’s pipeline, a relatively small slice compared to the 59% dominated by product data. However, the average deal value of these projects is 3x the average deal value across all our data business this year, and the value of data-for-AI projects has increased by an impressive 400% YoY.

This suggests that while still not many AI companies are actively shopping for web data, those that do are making significant investments. When they buy, they buy big.
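To make the "buy big" point concrete, here is a back-of-the-envelope sketch. It assumes (this is not stated in the figures above) that the 5% refers to the share of deals by count; under that assumption, a segment whose average deal is 3x the overall average holds roughly 5% × 3 of total pipeline value. The function name `value_share` is purely illustrative.

```python
def value_share(count_share: float, deal_value_multiple: float) -> float:
    """Estimate a segment's share of total pipeline value.

    count_share: fraction of all deals that belong to the segment
        (assumption: the 5% figure is a share by deal count).
    deal_value_multiple: segment's average deal value divided by the
        average deal value across all deals.

    Derivation: (n_seg * v_seg) / (n_all * v_all)
              = (n_seg / n_all) * (v_seg / v_all)
    """
    return count_share * deal_value_multiple


if __name__ == "__main__":
    share = value_share(count_share=0.05, deal_value_multiple=3.0)
    print(f"Estimated share of pipeline value: {share:.0%}")
```

If the 5% is already a share by value rather than by count, this estimate does not apply.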

We expect maturity in this vertical to grow and appetite to follow. But as of now, we have a few ideas about why not many AI companies are relying on data vendors just yet:

For structured data collections

Mature organizations building foundation large language models: Data is core business to these organizations, and most have the resources and the willingness to invest in expediting data extraction in-house. There are also arguably diminishing returns from collecting historical web data, as most of it is already within the scope of existing models’ training data, and the majority of current LLM architectures are not yet optimized to tap into more real-time data.

Early-stage AI startups: They are scrappy and technically savvy. Furthermore, there are two broad categories of companies currently building in AI:

Those building LLM-wrappers: Data is not their primary concern. They just need access to foundation large language models.

Those fine-tuning: At this stage they are most likely focusing on proprietary or first-party data rather than public web data. That said, once their core training data pipelines have matured, this segment of customers holds significant potential for leveraging web data. Vertical-specific structured data and diverse machine learning applications beyond LLMs present long-tail use cases that can greatly benefit from enrichment through web data.

For unstructured data collections

Broad crawls for unstructured data mean there are fewer banning issues. Extraction is also less complex. General web data collection becomes more of a horizontal scaling problem (throw more hardware at it). One note on this: we do notice that buyers now have a higher level of comfort with less structured data, and more of them are requesting WARC files and raw HTML.

For now, no one can predict or agree on how this will unfold in the next six months. But if there is one thing that most AI researchers agree on, it is that your model is only as good as your data. Whether it’s public web data, proprietary data, synthetic data, or most likely a combination of all three, your data will make or break your model. Data is truly your moat.

Lead Generation and Job Listings

Moving on from data for AI, there was one notable shift in how people are using data: lead generation.

Demand for data related to companies, merchants, and store locations has nearly doubled compared to last year, while the appetite for job listing data for market research and market intelligence purposes has grown by 50%.

Our hypothesis is that this surge is also somewhat tied to the macroeconomic landscape. In a time of economic uncertainty, businesses are looking for ways to de-risk their strategies by relying on publicly available data as proxy indicators for market dynamics.

Merchant, Company, and Location Data: The increased demand suggests businesses are starting to ramp up their marketing outreach as the global economy crawls out of geopolitical uncertainty. Retail companies rev up their marketing efforts by targeting businesses in specific regions (most requests target business information in specific geo-locations), while real estate developers focus on areas with rising commercial property listings, expecting that these locations may soon see increased activity.

Job Listings Data: The demand for job listing data is also rising, as businesses track hiring trends, skill demands, and salary benchmarks as indicators of industry health. An uptick in job postings can signal growth in specific sectors or locations, allowing businesses to adjust their strategies and prepare for changes in market conditions.

M&A and Consolidation

Zooming out to our own industry, we expect more mergers and acquisitions (M&A) in the coming years as companies look to consolidate their offerings. The trend is clear: point solutions are becoming less common as vertically focused vendors hit a growth bottleneck and are forced to expand horizontally and provide more comprehensive, integrated services.

We see companies that once specialised in one area expanding their portfolios. Some are now offering complete web scraping APIs, while others are integrating data centre proxies alongside their residential services.

The move toward consolidation is also driven by the realisation that R&D on a large scale is expensive and resource-intensive. Rather than investing heavily in creating a comprehensive suite of tools, many vendors are opting to acquire or partner with other companies to quickly fill gaps in their offerings.

What to Watch Out For

1. Jumping Blindfolded onto the AI Bandwagon

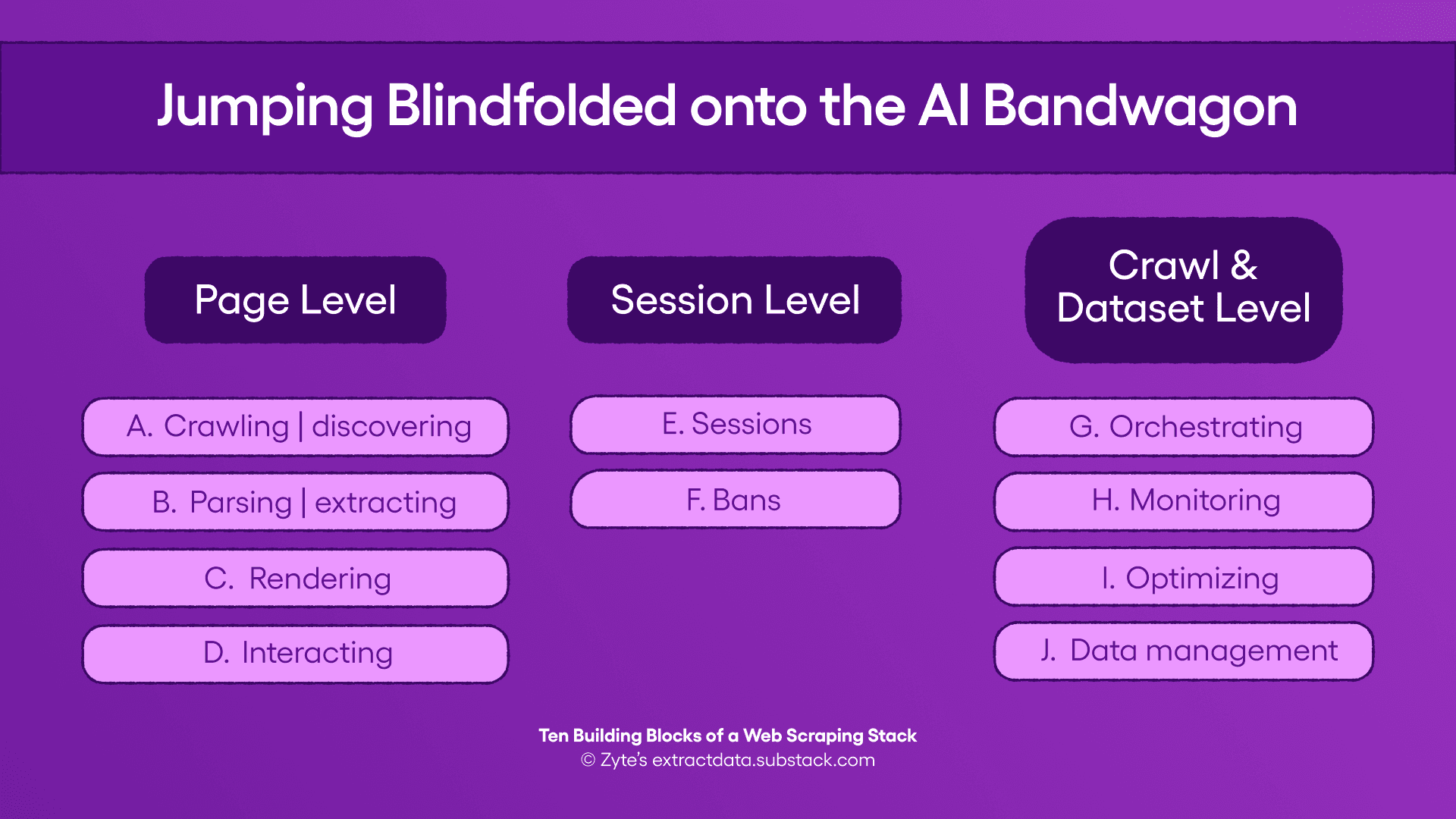

A closer look at the majority of AI-powered features launched in the web scraping space this year reveals a pattern of innovation in areas A, B, and F. Some industry players are already laying the groundwork for expansion in area J.

As foundation model providers continue to innovate, we also expect to see more launches targeting areas D and E. One unsubtle clue here is Anthropic’s advancements in computer use. There’s also a surge of launches and ongoing research into agentic AI frameworks, which means that capabilities in area G and beyond are inevitable. Think of your own army of interns hunting, discovering, and scraping web data for you.

The key questions for you are: How does this fit with your product roadmap? How do you make sure your team is not rushing to capitalize on the latest trends without a clear strategy?

Also beware of tool fatigue in the market. Differentiating your product in a market flooded with vaguely titled “AI-powered” tools means focusing on tangible, real-world benefits rather than buzzwords.

2. The Wrong AI for the Wrong Problem

Are you applying the right AI techniques to the right problems? For all the hype and potential that large language models (LLMs) offer, they are not a silver bullet. Relying on LLMs for web scraping is like using your laptop to keep your shirts from getting wrinkled. A large part of scraping is a deterministic task, while LLMs are probabilistic creatures.

Here at Zyte, we have found a combined approach, commonly referred to as composite AI (layering multiple AI techniques and leveraging their specific strengths), to be the pragmatic sweet spot for balancing cost and accuracy.

We landed on this winning combination of three techniques, each with a very different cost structure:

Lean, supervised machine learning models for standard schemas.

Small language models to refine and extend schemas.

Large language models for unstructured data.
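The three-way split above can be sketched as a simple router that sends each extraction task to the cheapest technique that fits it. This is a minimal illustration, not Zyte's actual implementation: the `ExtractionTask` fields, the `Technique` enum, and the routing rule in `choose_technique` are all hypothetical names and assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Technique(Enum):
    """The three techniques named in the list above."""
    SUPERVISED_ML = "lean supervised ML model"
    SMALL_LM = "small language model"
    LARGE_LM = "large language model"


@dataclass
class ExtractionTask:
    """Hypothetical description of an extraction job."""
    structured: bool        # does the target data fit a schema at all?
    standard_schema: bool   # is the schema a standard one (e.g. product)?


def choose_technique(task: ExtractionTask) -> Technique:
    """Route a task to one technique (assumed rule, for illustration)."""
    if not task.structured:
        # Unstructured data goes to a large language model.
        return Technique.LARGE_LM
    if task.standard_schema:
        # Standard schemas are handled by a lean supervised model.
        return Technique.SUPERVISED_ML
    # Custom or extended schemas are refined by a small language model.
    return Technique.SMALL_LM


if __name__ == "__main__":
    tasks = {
        "standard product page": ExtractionTask(True, True),
        "product page with custom fields": ExtractionTask(True, False),
        "free-form forum thread": ExtractionTask(False, False),
    }
    for name, task in tasks.items():
        print(f"{name}: {choose_technique(task).value}")
```

In a real system the layers would more likely be combined on the same page (for example, a supervised model for the standard fields plus a small language model for the extra ones) rather than chosen exclusively; the exclusive routing here just keeps the cost trade-off easy to see.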

3. Complacency for Those Currently Winning in The Scaling Game

In the section for Developers, we highlighted that while LLM-powered tools are entering the market and making scraping easier, “scraping ≠ scaling.” For now, scaling remains an art that many incumbents have perfected.

But this advantage may not last forever. Many of these tools are already incorporating features like CAPTCHA management and adaptive scraping into their development roadmaps, steadily closing the gap on scaling capabilities. And as foundation models become commoditised, LLM-powered tools are also likely to become more cost-effective.

How do you stay ahead? Take a note from these newcomers: their strength lies in exceptional UX design. By improving the time-to-scrape and ease-of-scrape of your own solutions, you can maintain your edge as a provider of scale-ready solutions.

Here’s a table to help you further assess your strategy.

Table 1: Scraping and Scaling: Focus of Functionalities

| Aspect | Scraping Focus | Scaling Focus |
|---|---|---|
| Extraction | Ease of extraction (low-code interfaces, AI-driven selectors). | Efficiency (minimizing costs for proxies, bandwidth, and processing to handle millions of pages efficiently). |
| Technology | Support for dynamic content (JavaScript rendering, headless browsing). | Proxy management (IP rotation, session handling). |
| Ban handling | Adaptability to site changes (auto-detection of structural changes). | Anti-bot handling technologies (CAPTCHA management, behavioral emulation). |
| Infra | Specialized use cases and challenges (e.g., scraping product listings, job postings). | Infrastructure robustness (load balancing, distributed systems) and resilience (redundancy, uptime guarantees). |

Leading today doesn’t guarantee staying on top tomorrow. Don’t let complacency define your fate.

Things to Remember

Dust off and revisit that wish list of data project ideas you once shelved due to budget constraints.

Factor in total cost of ownership (TCO), including setup, maintenance, and vendor support costs, before committing. Use clear metrics to evaluate whether web scraping is delivering the value that aligns with your business goals. ROI isn't just about financial returns—it’s also about time saved and risks mitigated.

Partner with vendors who are transparent about their data sourcing methods and adhere to compliance frameworks, avoiding reputational and financial risks.
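The total-cost-of-ownership point above can be turned into a quick calculation. The sketch below is one possible way to frame it: the class, field, and function names are hypothetical, the figures are made-up placeholders, and treating time saved and risk mitigated as monetary amounts is an assumption you would need to calibrate for your own business.

```python
from dataclasses import dataclass


@dataclass
class ScrapingProjectCosts:
    """Hypothetical cost model: one-off setup plus recurring yearly costs."""
    setup: float
    maintenance_per_year: float
    vendor_support_per_year: float

    def tco(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        recurring = self.maintenance_per_year + self.vendor_support_per_year
        return self.setup + years * recurring


def roi(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    costs = ScrapingProjectCosts(
        setup=10_000, maintenance_per_year=5_000, vendor_support_per_year=2_000
    )
    three_year_cost = costs.tco(years=3)

    # Benefit is more than revenue: it also counts time saved and risk avoided.
    benefit = 30_000 + 12_000 + 4_500  # revenue + time saved + risk mitigated
    print(f"3-year TCO: {three_year_cost:,.0f}")
    print(f"3-year ROI: {roi(benefit, three_year_cost):.0%}")
```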